Apache NiFi vs Pentaho Data Integration: Choosing the Best Fit for Your Business


Imagine your business data as a river: constantly flowing, ever-changing, and essential to powering your decisions. Now imagine needing to direct that river across multiple landscapes (cloud, on-prem, databases, and APIs) without letting it spill, stagnate, or overflow.

That’s where data integration tools like Apache NiFi and Pentaho Data Integration (PDI) come in. They act as the engineers of your data pipelines, but they build them very differently.

NiFi, born from the halls of the NSA, excels in real-time, event-driven architectures with its easy-to-use, flow-based interface. PDI, on the other hand, is like a Swiss Army knife for batch ETL: flexible, mature, and built for detailed transformations.

Choosing between them isn’t about which is better overall; it’s about which is better for your business. In this blog, we’ll compare Apache NiFi and Pentaho in depth, look at what makes each tool unique, and help you decide which one to trust with the flow of your data.

What is Apache NiFi?

Apache NiFi is an open-source data integration and workflow automation tool maintained by the Apache Software Foundation. Originally created by the U.S. National Security Agency (NSA) and later contributed to the open-source community, NiFi is designed to automate the flow of data between systems, enabling real-time data ingestion, transformation, and routing.

NiFi employs a web-based, drag-and-drop interface that allows users to design data pipelines visually. Its architecture is based on flow-based programming: independent processors exchange data through queued connections, which makes it straightforward to compose complex data flows. Key features include data provenance tracking, back-pressure mechanisms, and support for clustering to ensure scalability and reliability.
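To make the back-pressure idea concrete, here is a minimal Python sketch of a single connection between two processors. It is a conceptual model, not NiFi's implementation: the `FlowFile`, `Connection`, and `run_upstream` names are hypothetical, and only the default thresholds (10,000 FlowFiles or 1 GB per connection) are taken from NiFi's documented defaults.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class FlowFile:
    """Hypothetical stand-in for a NiFi FlowFile: attributes plus content bytes."""
    attributes: dict
    content: bytes


@dataclass
class Connection:
    """Simplified model of a connection queue with back-pressure thresholds.

    Defaults mirror NiFi's per-connection defaults (10,000 FlowFiles or 1 GB);
    everything else here is illustrative.
    """
    object_threshold: int = 10_000
    size_threshold_bytes: int = 1024 ** 3  # 1 GB
    queue: deque = field(default_factory=deque)
    queued_bytes: int = 0

    def back_pressure_engaged(self) -> bool:
        # Reaching either threshold is enough to pause the upstream processor.
        return (len(self.queue) >= self.object_threshold
                or self.queued_bytes >= self.size_threshold_bytes)

    def offer(self, flowfile: FlowFile) -> None:
        self.queue.append(flowfile)
        self.queued_bytes += len(flowfile.content)

    def poll(self):
        """Hand the oldest queued FlowFile to the downstream processor."""
        if not self.queue:
            return None
        flowfile = self.queue.popleft()
        self.queued_bytes -= len(flowfile.content)
        return flowfile


def run_upstream(connection: Connection, incoming: list) -> int:
    """Queue FlowFiles until back pressure engages; return how many were queued."""
    sent = 0
    for flowfile in incoming:
        # This sketch stops as soon as a threshold is reached; in NiFi the
        # thresholds are soft, so a running producer may overshoot slightly.
        if connection.back_pressure_engaged():
            break
        connection.offer(flowfile)
        sent += 1
    return sent
```

In NiFi itself, thresholds are configured per connection, and the framework stops scheduling the upstream processor while either threshold is exceeded, so a slow consumer is not flooded.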

What is Pentaho Data Integration?

Pentaho Data Integration (PDI), also known as Kettle, is an open-source ETL (Extract, Transform, Load) tool that is part of the Pentaho suite, now owned and maintained by Hitachi Vantara. PDI provides a graphical interface for designing data transformation workflows, enabling users to extract data from various sources, transform it as needed, and load it into target systems.

PDI supports a wide array of data sources, including relational databases, flat files, and big data platforms. Its drag-and-drop desktop designer, Spoon, allows users to build complex data integration jobs without the need for extensive coding. PDI also includes the command-line tools Kitchen and Pan for executing jobs and transformations, respectively, and Carte, a lightweight server for remote execution.
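Because Pan and Kitchen are plain command-line tools, scheduled PDI runs are often wrapped in cron, a workflow scheduler, or a small script. The Python sketch below assumes a Linux install with `pan.sh` and `kitchen.sh` in the PDI home directory and uses the `-file`, `-level`, and `-param:NAME=VALUE` options; the paths, parameter names, and helper functions are hypothetical.

```python
import subprocess
from pathlib import Path


def build_pdi_command(pdi_home: str, file_path: str, params=None, level: str = "Basic") -> list:
    """Build a Pan (.ktr) or Kitchen (.kjb) command line.

    Assumes a Linux/macOS install where pan.sh and kitchen.sh live in pdi_home
    (on Windows the launchers are Pan.bat and Kitchen.bat).
    """
    suffix = Path(file_path).suffix.lower()
    if suffix == ".ktr":
        runner = "pan.sh"      # Pan runs transformations
    elif suffix == ".kjb":
        runner = "kitchen.sh"  # Kitchen runs jobs
    else:
        raise ValueError(f"expected a .ktr or .kjb file, got {file_path!r}")

    cmd = [f"{pdi_home.rstrip('/')}/{runner}", f"-file={file_path}", f"-level={level}"]
    # Named parameters defined in the transformation/job are passed as -param:NAME=VALUE.
    for name, value in (params or {}).items():
        cmd.append(f"-param:{name}={value}")
    return cmd


def run_pdi(cmd: list) -> None:
    """Run Pan/Kitchen and raise if it reports a non-zero exit code."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        # PDI writes most of its log to stdout, so include the tail of both streams.
        raise RuntimeError(
            f"PDI exited with {result.returncode}:\n{result.stdout[-2000:]}\n{result.stderr[-2000:]}"
        )
```

For example, `run_pdi(build_pdi_command("/opt/pdi", "/etl/nightly_load.kjb", {"RUN_DATE": "2024-01-31"}))` would run a job with one named parameter, treating any non-zero exit code as a failure.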

Apache NiFi vs Pentaho Data Integration: Decoding the Key Differences

Let’s compare the two tools across the parameters that matter most.

| Parameter | Apache NiFi | Pentaho Data Integration |
| --- | --- | --- |
| Primary Use Case | Real-time data flow automation and event-driven pipelines. | Batch ETL (Extract, Transform, Load) for data warehousing and business intelligence. |
| Processing Model | Flow-based programming for continuous streaming and event-driven processing. | Transformations that stream rows between steps, orchestrated by jobs; typically run as scheduled, batch-oriented ETL workflows. |
| Interface | Web-based UI with a drag-and-drop canvas to design data flows visually. | Desktop application (Spoon) with drag-and-drop design of transformations and jobs. |
| Data Flow Handling | Handles asynchronous, non-blocking flows with features like back pressure and queue prioritization. | Steps within a transformation run in parallel and pass rows downstream; job entries execute in a defined sequence, suiting structured, step-by-step operations. |
| Performance Orientation | Optimized for low-latency, high-throughput streaming data pipelines. | Optimized for high-volume batch processing and complex data transformations. |
| Real-time Capability | Native support for real-time and streaming data. | Primarily batch processing, with limited real-time capabilities. |
| Extensibility | Highly extensible with custom processors and built-in integrations (Kafka, MQTT, etc.). | Supports plugins and scripting but may require additional configuration for custom extensions. |
| Data Provenance | Built-in, end-to-end data lineage tracking for audit and debugging. | Lineage is available but not as granular or intuitive as NiFi’s native provenance system. |
| Cloud & Big Data Support | Integrates well with cloud platforms, Hadoop, and streaming engines. | Supports cloud and big data integrations, but setup can be more involved. |
| Ease of Deployment | Simple to deploy and configure through its web-based interface and reusable flow definitions (templates in NiFi 1.x). | Requires more setup and system resources; generally heavier to deploy. |
| Learning Curve | Easier for users familiar with visual workflows and real-time data. | Moderate to steep for complex transformations and job orchestration. |
| Licensing | Open-source under Apache License 2.0. | Open-source with commercial options under Hitachi Vantara. |

Apache NiFi vs Pentaho Data Integration – Key Features

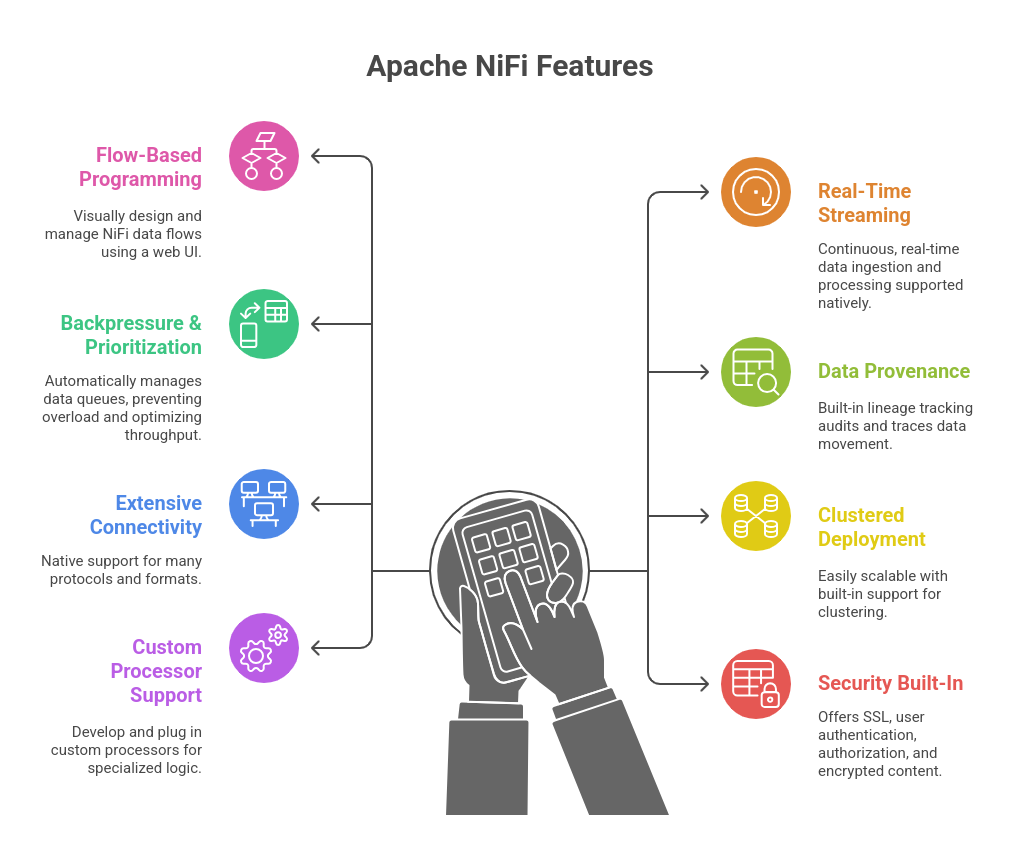

Key Features of Apache NiFi

- Flow-Based Programming: Design and manage data flows visually using a drag-and-drop web UI.

- Real-Time Data Streaming: Supports continuous, real-time data ingestion and processing.

- Backpressure & Prioritization: Automatically manages data queues to prevent overload and optimize throughput.

- Data Provenance: Built-in lineage tracking to audit and trace every data movement and transformation.

- Extensive Connectivity: Native support for a wide range of protocols and formats (Kafka, MQTT, HTTP, S3, HDFS, FTP, etc.).

- Clustered Deployment: Easily scalable with built-in support for clustering and distributed processing.

- Custom Processor Support: Develop and plug in custom processors for specialized logic.

- Security Built-In: Offers SSL, user authentication, authorization, and encrypted content.
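Queue prioritization decides which queued FlowFile a processor receives next. NiFi ships several prioritizers (for example, FirstInFirstOutPrioritizer and PriorityAttributePrioritizer); the Python sketch below is a simplified, hypothetical model of attribute-based ordering, assuming an integer `priority` attribute where lower values are served first and FlowFiles without the attribute are served last.

```python
import heapq
import itertools


class PriorityQueueConnection:
    """Simplified queue ordered by a "priority" attribute (hypothetical model).

    Assumptions: "priority" holds an integer string, lower values come first,
    FlowFiles without the attribute come after all prioritized ones, and ties
    fall back to arrival order. Non-numeric values are not handled here.
    """

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker preserving FIFO order

    def offer(self, attributes: dict) -> None:
        raw = attributes.get("priority")
        # (0, value) sorts before (1, 0), so unprioritized FlowFiles go last.
        key = (0, int(raw)) if raw is not None else (1, 0)
        heapq.heappush(self._heap, (key, next(self._arrival), attributes))

    def poll(self):
        """Return the attributes of the next FlowFile, or None if empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None
```

The arrival counter guarantees that the heap never has to compare two attribute dictionaries, and it keeps equal-priority FlowFiles in first-in, first-out order.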

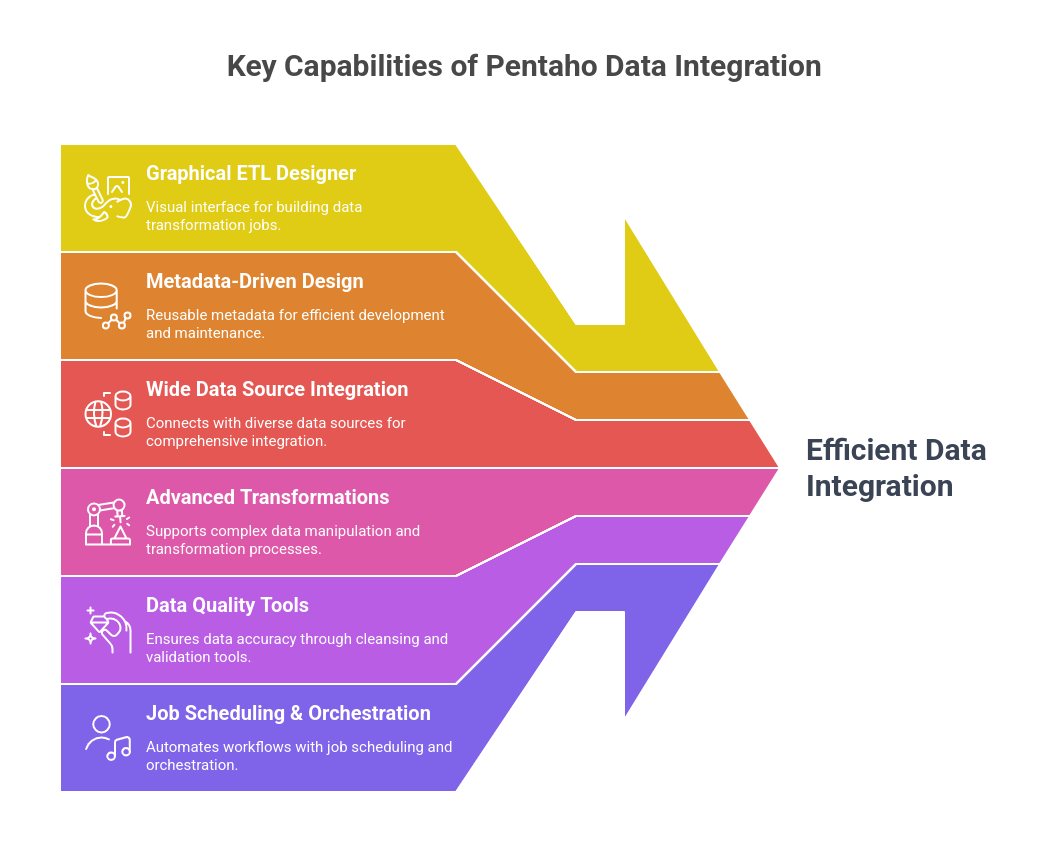

Key Features of Pentaho Data Integration

- Graphical ETL Designer: Spoon provides a powerful visual interface to build data transformation jobs.

- Metadata-Driven Design: Reusable metadata and transformations for efficient development and maintenance.

- Wide Data Source Integration: Connects with relational databases, flat files, cloud services, big data platforms, and more.

- Advanced Transformations: Supports filtering, merging, joining, and complex data manipulation.

- Data Quality Tools: Built-in steps for cleansing, deduplication, validation, and enrichment.

- Job Scheduling & Orchestration: Schedule and automate complex workflows using the Job designer.

- Big Data & Hadoop Integration: Built-in steps to work with Hadoop, Spark, Hive, and HDFS.

- Custom Scripting: Add custom logic using JavaScript, Java, or shell scripts.

- Remote Execution: Use the Carte server to run transformations remotely and distribute workloads.
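The difference between transformations and jobs matters for performance. In a PDI transformation, each step runs in its own thread and hands rows to the next step through a bounded buffer (the rowset, 10,000 rows by default), while a job runs its entries one after another. The Python sketch below models a three-step transformation that way; the step functions and sample rows are hypothetical, and real PDI steps are Java components configured in Spoon.

```python
import queue
import threading

END = object()  # sentinel marking the end of the row stream


def input_step(rows, out_q):
    for row in rows:
        out_q.put(row)  # blocks while the downstream rowset is full
    out_q.put(END)


def filter_step(in_q, out_q, predicate):
    while (row := in_q.get()) is not END:
        if predicate(row):
            out_q.put(row)
    out_q.put(END)


def output_step(in_q, sink):
    while (row := in_q.get()) is not END:
        sink.append(row)


def run_transformation(rows, predicate, rowset_size=10_000):
    """Run three steps concurrently, connected by bounded row buffers (hops)."""
    hop1 = queue.Queue(maxsize=rowset_size)
    hop2 = queue.Queue(maxsize=rowset_size)
    sink = []
    threads = [
        threading.Thread(target=input_step, args=(rows, hop1)),
        threading.Thread(target=filter_step, args=(hop1, hop2, predicate)),
        threading.Thread(target=output_step, args=(hop2, sink)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # a job entry would wait like this before starting the next entry
    return sink
```

Because rows flow through all steps at once, a transformation never has to hold the full dataset in memory; the bounded buffers play a role similar to NiFi's back pressure.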

Apache NiFi vs Pentaho Data Integration – Pros and Cons

Pros of Apache NiFi

- Great for real-time data: Perfect for streaming and event-based systems like IoT and logs.

- User-friendly UI: Intuitive drag-and-drop interface makes it easy to build and monitor NiFi data flows.

- Built-in data tracking: Data provenance lets you see exactly where data came from and how it was handled.

- Highly flexible: Easily integrates with various systems and supports custom processors.

- Scalable and distributed: Can run across clusters for large-scale data pipelines.

Cons of Apache NiFi

- Not built for complex transformations: Better for routing, filtering, and lightweight processing.

- Limited governance: Lacks robust data quality, cataloging, and metadata management tools.

- Harder to manage NiFi data flows across environments: Promoting flows from dev to prod requires extra manual steps and often custom scripting (for example, Ansible playbooks).

- Canvas clutter: Large or unorganized flows can become hard to manage visually.
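One way teams reduce those manual steps is to keep environment-specific values (hosts, paths, topic names) in parameter contexts and rewrite only those values when a flow moves between environments. The Python sketch below assumes the JSON layout of a flow definition downloaded from NiFi, with parameter contexts under a top-level `parameterContexts` key, each holding a `parameters` list of `name`/`value` entries; verify that layout against your NiFi version before relying on it. The function name and sample values are hypothetical.

```python
import json


def promote_flow_definition(flow_json: str, overrides: dict) -> str:
    """Return a flow definition with environment-specific parameter values applied.

    Assumed layout: {"parameterContexts": {ctx: {"parameters": [{"name", "value"}, ...]}}}.
    overrides maps context name -> {parameter name: new value}.
    """
    flow = json.loads(flow_json)  # parsing yields a fresh copy we can mutate
    contexts = flow.get("parameterContexts", {})
    for context_name, new_values in overrides.items():
        if context_name not in contexts:
            raise KeyError(f"parameter context {context_name!r} not found in flow")
        # Index parameters by name so typos in overrides fail loudly.
        params = {p["name"]: p for p in contexts[context_name].get("parameters", [])}
        for param_name, value in new_values.items():
            if param_name not in params:
                raise KeyError(f"parameter {param_name!r} not in context {context_name!r}")
            params[param_name]["value"] = value
    return json.dumps(flow, indent=2)
```

Sensitive parameter values are typically not included in downloaded flow definitions, so they still have to be supplied in the target environment.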

Is your NiFi team spending too much time deploying NiFi data flows across multiple clusters? Manual deployment of NiFi data flows is time-consuming, error-prone, and resource-intensive, ultimately affecting your business operations.

Data Flow Manager is built to overcome the challenges of manual NiFi data flow deployment and promotion. You can create, deploy, and promote NiFi data flows in minutes, without requiring the NiFi UI and controller services.

Pros of Pentaho Data Integration

- Powerful ETL capabilities: Excellent for data cleansing, enrichment, and transformation in batch jobs.

- Supports advanced logic: Handles complex business rules and multi-step workflows with ease.

- Rich data source support: Connects to nearly every major database, file format, and cloud system.

- Reusability and metadata: Promotes efficient development through reusable components and metadata-driven design.

- Ideal for data warehousing: Great for preparing analytics-ready datasets.

Cons of Pentaho Data Integration

- Not ideal for real-time use cases: Primarily designed for batch jobs and scheduled ETL.

- Steeper learning curve: Takes time to get familiar with all components and best practices.

- Resource-heavy setup: Can be heavier on system requirements, especially for large deployments.

- UI feels dated: Spoon is powerful but doesn’t feel as modern or smooth as some newer tools.

Apache NiFi vs Pentaho Data Integration – Use Cases

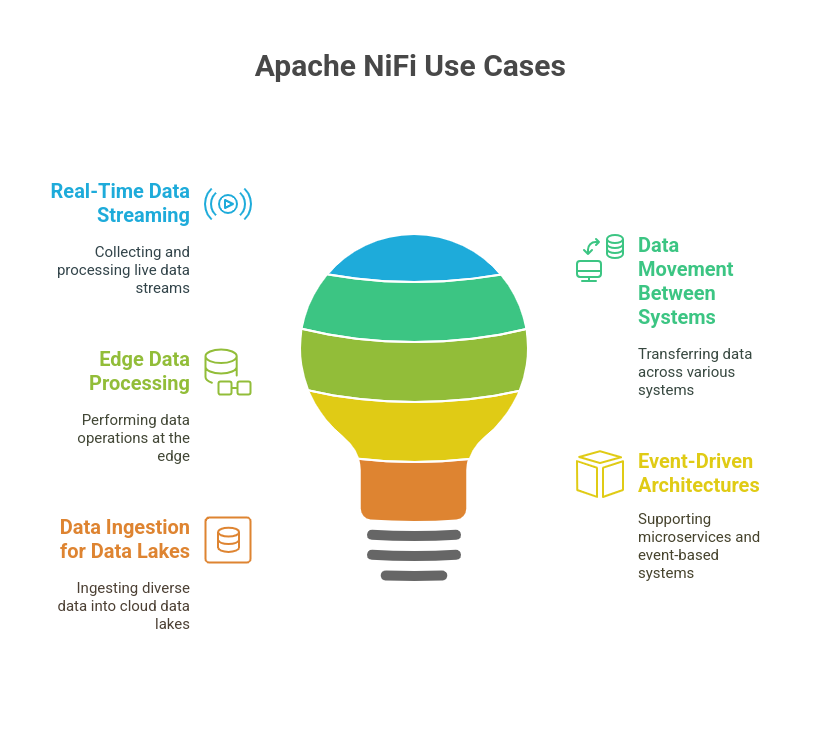

Apache NiFi Use Cases

- Real-Time Data Streaming: Ideal for collecting, routing, and processing live data streams from IoT devices, logs, sensors, or APIs.

- Data Movement Between Systems: Seamlessly transfers data between on-prem, cloud, and hybrid systems (e.g., from Kafka to HDFS or S3).

- Edge Data Processing: Performs lightweight filtering, transformation, and enrichment at the edge before sending to the cloud.

- Event-Driven Architectures: Fits perfectly into microservices and event-based systems with support for backpressure and prioritization.

- Data Ingestion for Data Lakes: Ingests diverse data types into cloud-based data lakes like AWS S3, Azure Data Lake, or Hadoop.

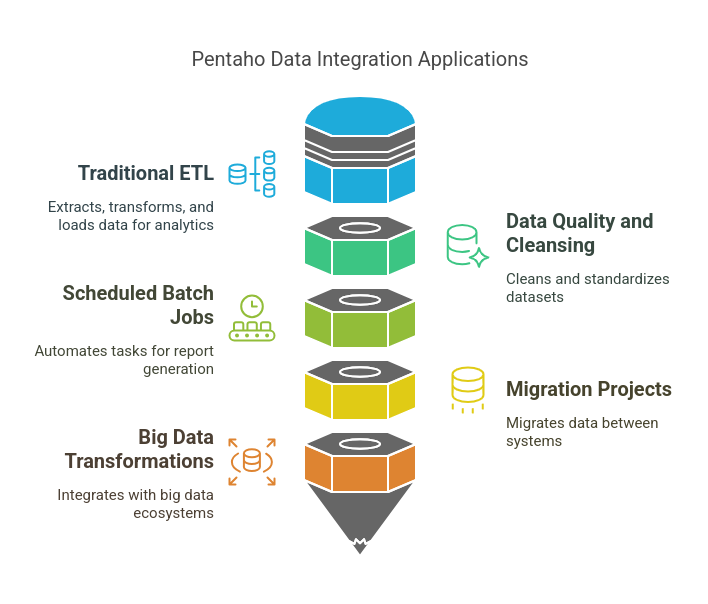

Pentaho Data Integration Use Cases

- Traditional ETL for Data Warehousing: Extracts, transforms, and loads data into structured data warehouses for analytics and BI.

- Data Quality and Cleansing: Cleans, deduplicates, and standardizes datasets before analytics or storage.

- Scheduled Batch Jobs: Automates nightly or hourly jobs for report generation, data syncing, or reconciliation.

- Migration Projects: Useful for migrating large datasets between systems or modernizing legacy data platforms.

- Big Data Transformations: Integrates with Hadoop, Spark, and other big data ecosystems for high-volume transformations.

Conclusion

Choosing between Apache NiFi and Pentaho Data Integration (PDI) ultimately comes down to your specific data needs.

If your priority is real-time data movement, stream processing, or flexible integration between diverse systems, Apache NiFi is a solid choice. Its visual interface, built-in data provenance, and ease of flow management make it a favorite for modern, event-driven architectures.

On the other hand, if you’re focused on complex data transformations, data warehousing, or batch ETL workflows, Pentaho Data Integration offers powerful capabilities. Its metadata-driven design and transformation depth are well-suited for analytics and BI initiatives.

If Apache NiFi aligns with your business requirements, Data Flow Manager is a must-have. Designed for on-premises NiFi, it lets you create, deploy, and promote flows within minutes, and it adds features such as an AI-powered flow creation assistant, scheduled NiFi data flow deployments, version control, audit logs, and easy rollback. Apache NiFi and Data Flow Manager together form a winning combination for your data initiatives.

FAQs

- Is NiFi good for ETL?

Yes, Apache NiFi is effective for ETL, especially when the focus is on data ingestion, routing, and lightweight transformations in real-time. While it may not support complex transformations as robustly as traditional ETL tools, NiFi excels in moving and processing streaming data efficiently across multiple systems.

- Is Apache NiFi a data integration tool?

Absolutely. Apache NiFi is a powerful data integration and flow automation tool. It allows you to collect, transform, and move data across a wide range of systems using a visual interface. Its support for numerous protocols and formats makes it highly versatile for integrating data in hybrid and distributed environments.

- Is Apache NiFi low code?

Yes, Apache NiFi is considered a low-code platform. It features a drag-and-drop UI for building data flows without writing much code. While it supports custom scripting for advanced use cases, most users can create powerful pipelines through configuration alone, making it accessible to both developers and non-developers.
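As an example of custom scripting, NiFi 2.x can load processors written in Python. The sketch below follows the `FlowFileTransform` pattern from NiFi's Python developer documentation; the processor name `UppercaseContent` is hypothetical, and the fallback stub classes are an assumption added only so the logic can be exercised outside NiFi (inside NiFi, the real `nifiapi` module is used).

```python
try:
    from nifiapi.flowfiletransform import FlowFileTransform, FlowFileTransformResult
except ImportError:
    # Assumption: minimal stand-ins used only when running outside NiFi.
    class FlowFileTransform:
        pass

    class FlowFileTransformResult:
        def __init__(self, relationship, contents=None, attributes=None):
            self.relationship = relationship
            self.contents = contents
            self.attributes = attributes


class UppercaseContent(FlowFileTransform):
    """Hypothetical processor that upper-cases UTF-8 text content."""

    class Java:
        implements = ["org.apache.nifi.python.processor.FlowFileTransform"]

    class ProcessorDetails:
        version = "0.0.1-SNAPSHOT"
        description = "Hypothetical example: upper-cases text content."

    def __init__(self, **kwargs):
        pass

    def transform(self, context, flowfile):
        text = flowfile.getContentsAsBytes().decode("utf-8")
        # Route to "success" with new content and one added attribute.
        return FlowFileTransformResult(
            relationship="success",
            contents=text.upper(),
            attributes={"uppercased": "true"},
        )
```

Most pipelines never need this: a processor like this is only worth writing when no built-in processor covers the logic.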
