Enhancing Data Resilience: Strategies for Business Continuity in the Face of Disruptions

In today’s digital-first world, data drives every decision, transaction, and customer interaction. Enterprises are under growing pressure to ensure that their data systems remain available, consistent, and secure, even in the face of unpredictable disruptions. Whether it’s a cloud outage, system misconfiguration, or cyberattack, the ability to maintain seamless data operations can be the difference between business continuity and chaos.

This blog explores how data resilience can be strategically built into your data flow architecture using Apache NiFi and why automation is critical in this journey.

Let’s dive in.

Understanding Data Resilience in the Modern Enterprise

Data resilience is the strategic capability of an organization to maintain continuous, reliable, and secure data operations despite disruptions, failures, or unexpected events. It goes far beyond traditional backup and recovery. True resilience lies in the design of fault-tolerant architectures, automated workflows, and proactive governance that ensure data pipelines remain functional, accurate, and accessible under pressure.

For C-level executives, data resilience translates directly into business continuity and operational excellence. It minimizes downtime, accelerates recovery, and builds stakeholder trust. When resilience is embedded into the core of data operations, analytics platforms stay operational, customer experiences stay seamless, and regulatory compliance is far easier to maintain when disruptions occur.

In a world where data is a competitive asset, resilience is not a technical luxury; it’s a business necessity.

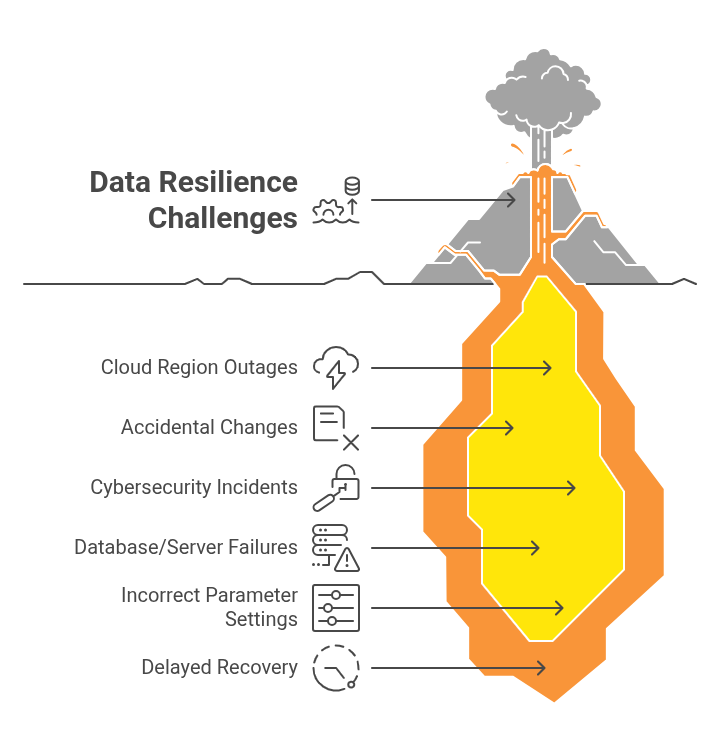

Common Disruptions That Threaten Data Continuity

Modern data ecosystems are sprawling and complex, with dependencies across cloud, on-premises, APIs, and third-party systems. A failure in any link of this chain can impact data operations.

Here are some of the most common threats:

- Cloud region outages affecting connectivity to data lakes or APIs

- Accidental changes in flow configurations during deployment

- Cybersecurity incidents like ransomware attacks

- Database or server failures, causing loss of intermediate data

- Incorrect parameter settings across environments, leading to broken flows

- Delayed recovery due to manual rollback procedures

Each of these threats can grind analytics and data-driven applications to a halt, affecting business agility and revenue.

The Limitations of Traditional Data Flow Management in Apache NiFi

While Apache NiFi offers a user-friendly, visual approach to building data pipelines, its data flow deployment and lifecycle management processes often lag behind modern software engineering practices.

Here are the key pain points:

- Manual Export/Import: Deploying NiFi data flows across Development, QA, and Production typically requires exporting flow definitions or versioned flows (or, in older NiFi releases, templates), which must then be re-imported and manually reconfigured in each target environment.

- Environment-Specific Variables: Each environment uses different parameters like API tokens, endpoint URLs, or credentials, making manual reconfiguration slow and error-prone.

- Lack of Standardization: Without strict versioning or automation, inconsistencies creep in, leading to hard-to-detect bugs and unreliable deployments.

- Time-Consuming Rollbacks: If something breaks in production, restoring a previous flow version can be a manual, guesswork-heavy process.

- Cross-Team Friction: Without automation, teams rely on tribal knowledge and on a small group of engineers, which slows handoffs between teams.

These issues compound over time, eroding trust in data pipelines and slowing down time-to-market for data products.
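One way to catch environment-specific configuration gaps before they reach production is a simple pre-deployment check. The sketch below is a minimal illustration in plain Python, assuming each environment's parameters are available as a dictionary; the function name `find_missing_parameters` and the sample parameter names are hypothetical and not part of NiFi.

```python
from typing import Dict, Set


def find_missing_parameters(
    environments: Dict[str, Dict[str, str]]
) -> Dict[str, Set[str]]:
    """Map each environment to the parameter names it lacks.

    A name counts as missing when at least one other environment defines it.
    Environments with nothing missing are left out of the result.
    """
    # Union of every parameter name seen in any environment.
    all_names: Set[str] = set()
    for params in environments.values():
        all_names.update(params)

    missing: Dict[str, Set[str]] = {}
    for env, params in environments.items():
        gap = all_names - set(params)
        if gap:
            missing[env] = gap
    return missing


if __name__ == "__main__":
    # Hypothetical parameter sets; real values would come from your own tooling.
    sample = {
        "dev": {"api.endpoint": "https://api.dev.example.com", "api.token": "dev-token"},
        "prod": {"api.endpoint": "https://api.example.com"},
    }
    print(find_missing_parameters(sample))
```

Running a check like this in a deployment pipeline turns a silent misconfiguration into an explicit, reviewable failure.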

Key Pillars of a Resilient Data Architecture

Building resilience isn’t just about having backups – it’s about designing your data workflows and infrastructure to anticipate, absorb, and adapt to disruptions.

Here are the foundational pillars:

1. Automation

Automated NiFi data flow deployment, promotion, and rollback reduce human error and improve consistency. Automation helps flows behave predictably even under stress.

2. Environment-Aware Deployments

Using environment-specific configurations stored in parameter contexts ensures that the same NiFi data flow can work across Dev, QA, and Prod without manual intervention.
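As a minimal sketch of this idea, assume a flow whose settings are plain key/value pairs. The snippet keeps one shared set of defaults plus a small override map per environment, and resolves the effective values at deployment time. The names `BASE_PARAMETERS`, `ENVIRONMENT_OVERRIDES`, and `resolve_parameters`, along with the sample values, are illustrative assumptions rather than NiFi APIs; in NiFi itself these values would live in parameter contexts.

```python
from typing import Dict

# Hypothetical defaults shared by every environment.
BASE_PARAMETERS: Dict[str, str] = {
    "api.endpoint": "https://api.dev.example.com",
    "batch.size": "100",
    "retry.count": "3",
}

# Hypothetical per-environment overrides; only the differences are listed.
ENVIRONMENT_OVERRIDES: Dict[str, Dict[str, str]] = {
    "dev": {},
    "qa": {"api.endpoint": "https://api.qa.example.com"},
    "prod": {"api.endpoint": "https://api.example.com", "batch.size": "1000"},
}


def resolve_parameters(environment: str) -> Dict[str, str]:
    """Return the effective parameters for one environment."""
    if environment not in ENVIRONMENT_OVERRIDES:
        raise ValueError(f"Unknown environment: {environment!r}")
    resolved = dict(BASE_PARAMETERS)  # copy so the defaults stay untouched
    resolved.update(ENVIRONMENT_OVERRIDES[environment])
    return resolved


if __name__ == "__main__":
    for env in ("dev", "qa", "prod"):
        print(env, resolve_parameters(env))
```

The flow logic never changes between environments; only the resolved values do, which is what makes promotion repeatable.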

3. Version Control

Versioning enables traceability, safe rollback, and structured collaboration. You know exactly who changed what and when.
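To make the rollback idea concrete, here is a small in-memory sketch, assuming a flow definition can be represented as a dictionary. The `FlowVersion` and `FlowHistory` classes are hypothetical and stand in for whatever registry or Git-based store you actually use. Note the design choice: a rollback is recorded as a new version that copies an earlier one, so the audit trail is never rewritten.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List


@dataclass(frozen=True)
class FlowVersion:
    number: int
    author: str
    comment: str
    definition: Dict[str, Any]
    created_at: datetime


@dataclass
class FlowHistory:
    """Hypothetical append-only history of flow definitions."""

    versions: List[FlowVersion] = field(default_factory=list)

    def commit(self, author: str, comment: str, definition: Dict[str, Any]) -> FlowVersion:
        version = FlowVersion(
            number=len(self.versions) + 1,
            author=author,
            comment=comment,
            definition=dict(definition),  # shallow copy to decouple from the caller
            created_at=datetime.now(timezone.utc),
        )
        self.versions.append(version)
        return version

    def current(self) -> FlowVersion:
        if not self.versions:
            raise LookupError("No versions have been committed yet")
        return self.versions[-1]

    def rollback_to(self, number: int, author: str) -> FlowVersion:
        """Restore an earlier version by committing a copy of it."""
        target = next((v for v in self.versions if v.number == number), None)
        if target is None:
            raise LookupError(f"Version {number} does not exist")
        return self.commit(author, f"Rollback to version {number}", target.definition)


if __name__ == "__main__":
    history = FlowHistory()
    history.commit("alice", "Initial flow", {"batch.size": "100"})
    history.commit("bob", "Increase batch size", {"batch.size": "1000"})
    restored = history.rollback_to(1, "carol")
    print(restored.number, restored.comment, restored.definition)
```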

4. Monitoring & Alerting

Real-time metrics, logs, and health dashboards help you spot issues early and reduce Mean Time to Detect (MTTD) and Mean Time to Resolve (MTTR).
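The two metrics are simple averages over incident timestamps. The sketch below is one way to compute them, under the assumption that MTTD is measured from the start of an incident to its detection and MTTR from the start to its resolution (some teams measure MTTR from detection instead). The `Incident` class and the sample timestamps are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Incident:
    started: datetime
    detected: datetime
    resolved: datetime


def mean_duration(durations: List[timedelta]) -> timedelta:
    if not durations:
        raise ValueError("At least one incident is required")
    return sum(durations, timedelta()) / len(durations)


def mttd(incidents: List[Incident]) -> timedelta:
    """Mean time from incident start to detection."""
    return mean_duration([i.detected - i.started for i in incidents])


def mttr(incidents: List[Incident]) -> timedelta:
    """Mean time from incident start to resolution (assumed definition)."""
    return mean_duration([i.resolved - i.started for i in incidents])


if __name__ == "__main__":
    # Hypothetical incidents: detection took 10 and 30 minutes,
    # resolution took 60 and 120 minutes.
    incidents = [
        Incident(datetime(2024, 1, 8, 9, 0), datetime(2024, 1, 8, 9, 10), datetime(2024, 1, 8, 10, 0)),
        Incident(datetime(2024, 1, 9, 14, 0), datetime(2024, 1, 9, 14, 30), datetime(2024, 1, 9, 16, 0)),
    ]
    print("MTTD:", mttd(incidents))  # MTTD: 0:20:00
    print("MTTR:", mttr(incidents))  # MTTR: 1:30:00
```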

5. Redundancy & High Availability

Running NiFi in clustered mode with load balancing helps ensure that a single node failure doesn’t take the whole pipeline down.

How Apache NiFi Contributes to Resilience

Apache NiFi excels in visual data flow design, connection queueing, and built-in retry mechanisms. It supports data provenance, which provides visibility into data lineage, a critical feature for debugging and compliance.

However, on its own, NiFi does not provide:

- Seamless promotion of NiFi data flows between environments

- Centralized flow lifecycle management

- Automated rollback

- Centralized audit logs for NiFi data flow changes

To truly achieve enterprise-grade resilience, these capabilities must be layered on top of NiFi, often through additional tools or custom scripts.

Strategies for Enhancing Business Continuity with NiFi

Implementing the following strategies can dramatically improve your data system’s resilience:

- Design Idempotent Flows: Ensure your processors and data operations can safely be retried without duplicating data or introducing inconsistencies.

- Use Parameter Contexts Smartly: Separate config from code. Parameter contexts let you maintain consistent flow logic while adapting configurations per environment.

- Automate Snapshots and Backups: Regularly back up flow definitions and configurations so you can quickly restore them in case of failure.

- Implement CI/CD for Data Flows: Bring DevOps practices into the data world. Automate testing, validation, and promotion using Git-based workflows or orchestration tools.

- Deploy Across Clusters: Use NiFi’s clustering capabilities and run failover-ready environments for high availability.
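Of the strategies above, idempotency is the one most easily shown in code. The sketch below is a toy in-memory example, assuming every record carries a unique `id`; the `IdempotentSink` class and the record fields are hypothetical. It illustrates the principle that re-delivering the same batch after a retry must not change the result.

```python
from typing import Any, Dict, Iterable, Set


class IdempotentSink:
    """Toy sink that applies each record at most once, keyed by record id."""

    def __init__(self) -> None:
        self.balances: Dict[str, int] = {}
        self._applied_ids: Set[str] = set()

    def apply(self, record: Dict[str, Any]) -> bool:
        """Apply the record; return False if it was already applied."""
        record_id = str(record["id"])
        if record_id in self._applied_ids:
            return False  # duplicate delivery, safely ignored
        account = str(record["account"])
        self.balances[account] = self.balances.get(account, 0) + int(record["amount"])
        self._applied_ids.add(record_id)
        return True


def deliver(sink: IdempotentSink, batch: Iterable[Dict[str, Any]]) -> int:
    """Send a batch to the sink and return how many records were newly applied."""
    return sum(1 for record in batch if sink.apply(record))


if __name__ == "__main__":
    sink = IdempotentSink()
    batch = [
        {"id": "tx-1", "account": "A", "amount": 50},
        {"id": "tx-2", "account": "A", "amount": 25},
    ]
    print(deliver(sink, batch))  # first delivery applies both records
    print(deliver(sink, batch))  # simulated retry applies none
    print(sink.balances)
```

In a real pipeline the set of applied ids would need durable storage, for example a unique key constraint in the target database, so that it survives restarts.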

Conclusion: Future-Proofing Your Data Pipelines

As enterprises embrace digital transformation, data becomes both an asset and a vulnerability. Without resilient pipelines, a single outage or misconfiguration can derail operations, customer experience, and revenue.

The way forward lies in automation, standardization, and observability.

This is where a solution like Data Flow Manager comes into play, offering automated flow promotion, environment-aware deployments, and version-controlled rollback mechanisms on top of Apache NiFi. It empowers data teams to build robust, business-aligned, and disruption-ready data architectures without reinventing the wheel.
