Key Challenges of Data Governance in Data Streaming

In today’s digital-first world, businesses are increasingly relying on real-time data streaming to drive decisions, personalize experiences, and automate operations. Whether it’s financial transactions flowing through trading platforms, sensor data from IoT devices, or live customer interactions across channels, data is moving faster than ever before.

But with this velocity comes a new set of challenges: How do you ensure data governance when data never stops moving?

Traditional governance models were designed for static, batch-processed data that can be inspected before anyone uses it, so they don’t carry over cleanly to streaming environments. Without strong controls, organizations face operational risks, regulatory exposure, and a loss of data trust.

Key Data Governance Challenges in Real-Time Data Streaming

Streaming data pipelines are inherently complex. They span multiple sources, systems, and transformations, often executing in milliseconds. This dynamic nature makes governing streaming data both challenging and mission-critical. Below are the key governance issues organizations must address:

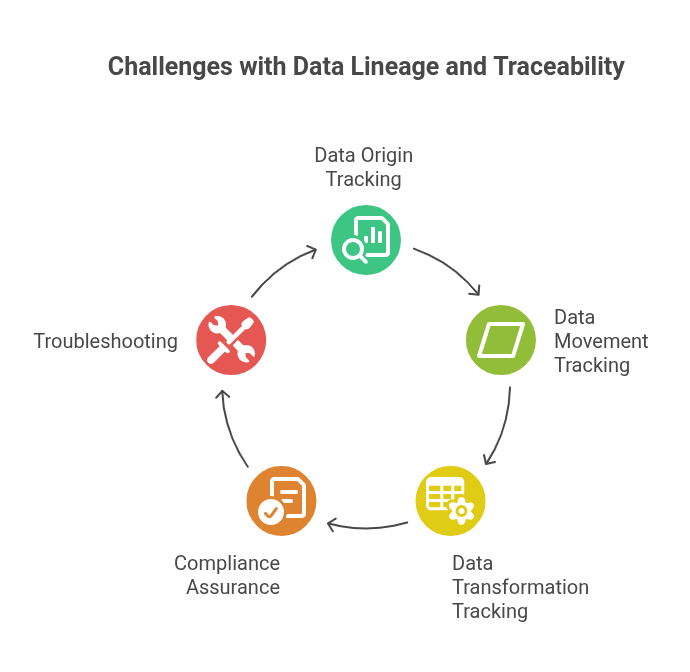

1. Data Lineage and Traceability

In real-time streaming environments, tracking the origin, movement, and transformation of data is exceptionally difficult. Batch systems log each job or transformation with a clear timestamp. Streaming systems, by contrast, process data continuously, often without discrete job boundaries or persistent checkpoints to attach a lineage record to.

Why it matters:

Without end-to-end lineage:

- You can’t trace where the data originated or how it was altered.

- Compliance becomes a guessing game.

- Troubleshooting and root-cause analysis are significantly hindered.

Ultimately, a lack of lineage undermines data trust, audit readiness, and accountability.
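
One way to keep lineage without job boundaries is to let each event carry its own history. The sketch below is only an illustration of that idea in plain Python: the names `Event`, `LineageEntry`, and `apply_step` are hypothetical and not part of any streaming framework, and a real pipeline would usually write these entries to a lineage store rather than keep them on the event.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Callable


@dataclass(frozen=True)
class LineageEntry:
    step: str   # name of the transformation that ran
    at: str     # UTC timestamp in ISO 8601 format


@dataclass(frozen=True)
class Event:
    source: str                           # where the record originated
    payload: dict[str, Any]
    lineage: tuple[LineageEntry, ...] = ()


def apply_step(event: Event, step: str,
               fn: Callable[[dict[str, Any]], dict[str, Any]]) -> Event:
    """Run one transformation and append a lineage entry describing it."""
    entry = LineageEntry(step=step, at=datetime.now(timezone.utc).isoformat())
    # Work on a copy so the incoming event is left untouched.
    return Event(event.source, fn(dict(event.payload)), event.lineage + (entry,))


# Illustrative data only.
raw = Event(source="pos-terminal-17", payload={"amount": "12.50", "currency": "usd"})
parsed = apply_step(raw, "parse_amount",
                    lambda p: {**p, "amount": float(p["amount"])})
final = apply_step(parsed, "normalize_currency",
                   lambda p: {**p, "currency": p["currency"].upper()})

trail = [entry.step for entry in final.lineage]
```

Here `trail` lists the steps in the order they ran, and `final.source` still names the origin, which covers the first two questions in the list above: where the data came from and how it was altered.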

2. Ensuring Data Quality in Motion

Streaming data often arrives with defects such as missing fields, duplicate entries, out-of-order events, or incorrect values. Because it moves quickly and constantly, batch validation techniques that inspect a complete dataset after it lands cannot be applied as-is; checks have to run on each event as it passes through.

Why it matters:

Poor data quality can lead to:

- Inaccurate insights and decisions.

- Broken downstream processes.

- Regulatory and reputational risks.

In high-stakes industries like finance and healthcare, data errors can be catastrophic.
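
To make "quality in motion" concrete, here is a minimal per-event validator in Python covering three of the defects mentioned above: missing fields, duplicates, and out-of-order events. It is a sketch under stated assumptions: the field names, the five-second lateness tolerance, and the `StreamValidator` class are all hypothetical, and production systems typically rely on their stream processor's own watermarking and deduplication features.

```python
from collections import OrderedDict

REQUIRED_FIELDS = ("event_id", "ts", "value")   # hypothetical schema
ALLOWED_LATENESS = 5                             # seconds; illustrative value
MAX_SEEN_IDS = 10_000                            # bounds memory used for dedup


class StreamValidator:
    def __init__(self):
        self._seen = OrderedDict()   # recently seen event ids, oldest first
        self._max_ts = None          # highest timestamp observed so far

    def check(self, event: dict) -> str:
        """Return 'ok' or the reason the event should be quarantined."""
        missing = [f for f in REQUIRED_FIELDS if event.get(f) is None]
        if missing:
            return "missing:" + ",".join(missing)

        if event["event_id"] in self._seen:
            return "duplicate"
        self._seen[event["event_id"]] = True
        if len(self._seen) > MAX_SEEN_IDS:
            self._seen.popitem(last=False)   # evict the oldest id

        # Reject events that trail the newest timestamp by more than the tolerance.
        if self._max_ts is not None and event["ts"] < self._max_ts - ALLOWED_LATENESS:
            return "too_late"
        self._max_ts = event["ts"] if self._max_ts is None else max(self._max_ts, event["ts"])
        return "ok"


validator = StreamValidator()
events = [
    {"event_id": "a", "ts": 100, "value": 1.0},
    {"event_id": "b", "ts": 110, "value": 2.0},
    {"event_id": "a", "ts": 100, "value": 1.0},   # duplicate id
    {"event_id": "c", "ts": 101, "value": 3.0},   # 9 s behind the newest event
    {"event_id": "d", "ts": 108, "value": None},  # missing value
    {"event_id": "e", "ts": 107, "value": 4.0},   # late, but within tolerance
]
results = [validator.check(e) for e in events]
```

Returning a reason instead of silently dropping the event lets rejected records be routed to a quarantine stream, so quality problems stay visible rather than disappearing.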

3. Access Control and Security

Streaming pipelines typically involve a wide array of interconnected systems, such as databases, APIs, messaging brokers, and cloud services. Ensuring that only authorized users and systems access sensitive data is a serious and ongoing challenge.

Why it matters:

Without proper access control:

- Sensitive data like PII or financial records may be exposed.

- Internal users may unintentionally or maliciously alter live data flows.

- The organization becomes vulnerable to compliance violations and breaches.
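
A common baseline for these risks is deny-by-default authorization: a user or service may act on a stream only if a rule explicitly says so. The Python sketch below illustrates the principle only. The principals, stream names, and the `is_allowed` helper are hypothetical, and in practice this check is delegated to the broker's or platform's own authorization mechanism.

```python
# Explicit grants: (principal, stream) -> permitted actions.
# Anything not listed here is denied.
ACL = {
    ("svc-ingest", "payments.raw"): {"write"},
    ("svc-fraud-scoring", "payments.raw"): {"read"},
    ("analyst-team", "payments.masked"): {"read"},
}


def is_allowed(principal: str, stream: str, action: str) -> bool:
    """Return True only when an explicit grant covers this action."""
    return action in ACL.get((principal, stream), set())


can_score = is_allowed("svc-fraud-scoring", "payments.raw", "read")
can_tamper = is_allowed("svc-fraud-scoring", "payments.raw", "write")
can_peek = is_allowed("analyst-team", "payments.raw", "read")
```

In this example the analysts can read only the masked stream, and the scoring service can read but not alter the raw one, which addresses both exposure of sensitive data and unintended changes to live flows.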

4. Policy Enforcement Gaps

Governance policies, such as data retention limits, encryption standards, field masking, or schema consistency, need to be enforced uniformly across all data streams. However, in many organizations, tools and teams operate in silos, leading to fragmented enforcement.

Why it matters:

Inconsistent policy enforcement results in:

- Regulatory violations, like storing data beyond legally permitted periods.

- Operational risks, such as deploying flows with incorrect schemas.

- Audit failures, due to a lack of policy documentation or enforcement history.
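
One way to reduce fragmented enforcement is to declare a policy once and apply the same function to every stream. The following Python sketch assumes a hypothetical `StreamPolicy` with two rules, field masking and a retention limit. The field names and the simple `***` redaction are illustrative; a real deployment would more likely use tokenization or keyed hashing.

```python
from dataclasses import dataclass
from typing import Optional

REDACTED = "***"


@dataclass(frozen=True)
class StreamPolicy:
    masked_fields: frozenset   # fields that must not leave in clear text
    retention_seconds: int     # maximum age a record may have


def enforce(record: dict, policy: StreamPolicy, now: int) -> Optional[dict]:
    """Return a policy-compliant copy of the record, or None if it is past retention."""
    if now - record["ts"] > policy.retention_seconds:
        return None
    return {key: REDACTED if key in policy.masked_fields else value
            for key, value in record.items()}


policy = StreamPolicy(masked_fields=frozenset({"email", "card_number"}),
                      retention_seconds=3600)

# Illustrative records; timestamps are plain seconds.
fresh = {"ts": 1_000, "email": "a@example.com",
         "card_number": "4111111111111111", "amount": 20}
stale = {"ts": 0, "email": "b@example.com", "amount": 5}

out_fresh = enforce(fresh, policy, now=2_000)
out_stale = enforce(stale, policy, now=4_000)
```

Because the policy is a single value rather than logic scattered across teams, it can be versioned and reviewed, which also gives auditors the enforcement history mentioned above.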

5. Compliance and Regulatory Risks

Regulations such as GDPR, HIPAA, CCPA, SOX, and others require organizations to demonstrate control, transparency, and accountability in how they process data. Streaming environments, by their very nature, make it harder to log user activity, capture flow changes, and track data usage in real time.

Why it matters:

Without adequate governance structures:

- You risk non-compliance fines.

- You lack the necessary evidence for audits and legal reviews.

- You lose stakeholder trust due to a perceived lack of control.

Apache NiFi: An Ideal Platform for Real-Time Data Streaming

Apache NiFi is a powerful, open-source platform designed to automate the movement and transformation of data between systems. With its intuitive flow-based UI, robust processor library, and support for both streaming and batch pipelines, NiFi has become a go-to solution for organizations needing real-time data integration.

How NiFi Powers Streaming Data Pipelines

NiFi enables users to:

- Ingest data from diverse sources like APIs, databases, IoT devices, cloud storage, and message queues.

- Transform and enrich data on the fly using built-in processors.

- Route data to destinations such as Kafka, data lakes, analytics platforms, and cloud warehouses.

- Monitor and trace data movement through its native data provenance capabilities.

This flexibility makes NiFi ideal for building real-time data pipelines across industries, whether it’s fraud detection in banking, patient monitoring in healthcare, or logistics tracking in transportation.

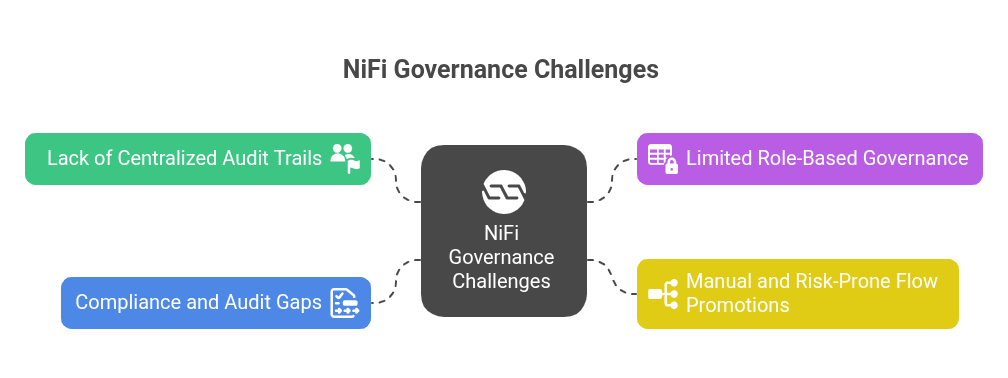

Challenges of Ensuring Data Governance in Apache NiFi

While NiFi excels at streamlining data flow, it falls short when it comes to enterprise-grade governance and control over those flows. Common challenges include:

- Lack of Centralized Audit Trails

NiFi’s provenance tracking captures data movement, but it doesn’t fully log who made flow design changes, when, or why. This makes it hard to track user activity for flow modifications.

- Limited Role-Based Governance Over Flow Changes

NiFi supports user authentication and basic access control, but it lacks granular permissions for managing flow changes, approvals, or role-specific access across environments like development, staging, and production.

- Manual and Risk-Prone Flow Promotions

Promoting data flows from one environment to another is typically manual and error-prone. This increases the risk of misconfigurations, unauthorized changes, and production issues.

- Compliance and Audit Gaps

Without structured change tracking and environment-specific controls, teams struggle to demonstrate compliance with policies or regulatory standards such as GDPR, HIPAA, or internal data governance rules.

In essence, NiFi manages the data, but not the governance of how flows are created, changed, or deployed, especially in large teams or regulated environments.

Data Flow Manager: The Governance Layer for Apache NiFi

To fill this gap, organizations are adopting Data Flow Manager (DFM), a purpose-built solution for seamless NiFi flow management that also adds a governance and compliance layer.

Data Flow Manager (DFM) gives teams:

- Comprehensive Flow Change Audit Trails

Every change to a flow is recorded, including who made it, when, and what was changed, providing full visibility for audits and reviews.

- Role-Based Access Control for Flow Management

Define and enforce granular permissions for viewing, editing, approving, or promoting flows. This helps implement separation of duties and protects critical environments.

- Safe Versioning and Rollback

Maintain a clear version history of flows with the ability to compare, revert, or promote specific versions across environments (Dev → Staging → Prod).

Together, NiFi + Data Flow Manager offer the best of both worlds: real-time data streaming with enterprise-grade governance.

Final Words

As organizations increasingly rely on real-time data to drive operations and decisions, the need for robust data governance becomes more urgent than ever. Governance is no longer just about compliance; it’s about ensuring that data remains accurate, secure, and trustworthy across its entire lifecycle, even as it moves at high velocity through complex pipelines.

In streaming environments, where data is continuous and ever-changing, governance challenges like lineage tracking, access control, and policy enforcement demand thoughtful, scalable solutions. By prioritizing governance as a core pillar of their data strategy, organizations can reduce risk, improve transparency, and build a foundation of trust that supports innovation, agility, and long-term growth.
